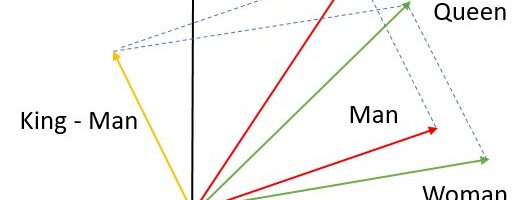

An article by Plotly [1] shows that the standard analogy example used to explain what embeddings represent is a bit shaky. It sounds good, and it works like the analogy questions from the SAT. But it only kind of works.

I was reading a lot about embeddings, and it really is surprising that simple vector math can solve analogies. At first I assumed the major models must have been trained on analogies. You really could build layers that subtract and add embeddings and do supervised learning on a known set of analogies, training the embeddings to represent gender with a single, consistent vector. But as far as I can tell, nobody does this. The analogy behavior seems to be just an amazing emergent feature of training the hidden layer we get the embeddings from!

It kind of works. But not quite, and it takes a bit of cheating. [2] Taking the embedding vector for King, subtracting the vector for Man, and adding the vector for Woman produces a new vector. But that vector doesn’t move far enough away from King: by cosine distance, King is still its nearest neighbor. You have to exclude King, Man, and Woman from the candidates before Queen comes out as the closest embedding.
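To make the "cheating" step concrete, here is a minimal sketch using NumPy. The four-dimensional vectors in `TOY` are hypothetical values invented for illustration, not real embeddings, and the helper names are my own. They are chosen so that gender is only a small part of each vector, which is roughly the situation described above.

```python
import numpy as np

# Hypothetical toy vectors, invented for illustration only (not real embeddings).
TOY = {
    "king":  np.array([1.0, 0.5,  0.2, 0.0]),
    "queen": np.array([0.8, 0.5, -0.2, 0.6]),
    "man":   np.array([0.0, 1.0,  0.2, 0.0]),
    "woman": np.array([0.0, 1.0, -0.1, 0.1]),
}


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors (1.0 means same direction)."""
    return float(np.dot(a, b) / (np.linalg.norm(a) * np.linalg.norm(b)))


def rank_by_similarity(target, vectors, exclude=()):
    """Return (word, similarity) pairs, most similar first, skipping excluded words."""
    scored = [
        (word, cosine_similarity(target, vec))
        for word, vec in vectors.items()
        if word not in exclude
    ]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)


# King - Man + Woman
analogy = TOY["king"] - TOY["man"] + TOY["woman"]

ranked_all = rank_by_similarity(analogy, TOY)
ranked_filtered = rank_by_similarity(analogy, TOY, exclude=("king", "man", "woman"))

print(ranked_all[0][0])       # king
print(ranked_filtered[0][0])  # queen
```

Without the `exclude` argument the nearest neighbor is the word we started from. Queen only wins once the three input words are removed from the candidates.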

I tried this myself with OpenAI’s modern embedding model, “text-embedding-3-small”. [3] It gives the same result: the closest vector is still King. There’s a lot of error even if you exclude King from the possible results. The embeddings themselves don’t store good analogy information in a form that simple linear vector math can recover. The hidden layers and attention heads still have work to do to turn the embeddings into something really useful. It’s neat that we get somewhat close values, and it shows that something is encoded in these embeddings. The results just don’t work exactly the way I’ve read and been taught that embeddings work.
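For anyone who wants to repeat the experiment, the following is a rough sketch and not the code linked in the footnote. It assumes the `openai` Python package (v1 or later) is installed and that `OPENAI_API_KEY` is set in the environment. The function names and the candidate word list are hypothetical choices for illustration.

```python
import numpy as np


def embed_words(words, model="text-embedding-3-small"):
    """Fetch one embedding per word. Assumes `openai` >= 1.0 and OPENAI_API_KEY."""
    from openai import OpenAI  # imported lazily so the rest runs without the package

    client = OpenAI()
    response = client.embeddings.create(model=model, input=list(words))
    # Each returned item carries the index of the input it belongs to.
    items = sorted(response.data, key=lambda item: item.index)
    return {word: np.array(item.embedding) for word, item in zip(words, items)}


def analogy_gap(vectors, a, b, c, expected):
    """Compare how close a - b + c lands to `expected` versus the starting word `a`.

    Returns (similarity_to_a, similarity_to_expected, gap); a positive gap
    means the result is still closer to `a` than to `expected`.
    """
    target = vectors[a] - vectors[b] + vectors[c]

    def cos(u, v):
        return float(np.dot(u, v) / (np.linalg.norm(u) * np.linalg.norm(v)))

    sim_a = cos(target, vectors[a])
    sim_expected = cos(target, vectors[expected])
    return sim_a, sim_expected, sim_a - sim_expected


def run_king_queen_demo():
    """Calls the OpenAI API, so it needs network access and a valid key."""
    # Hypothetical candidate list; any vocabulary could be used here.
    words = ["king", "man", "woman", "queen", "prince", "princess"]
    vectors = embed_words(words)
    print(analogy_gap(vectors, "king", "man", "woman", expected="queen"))
```

Calling `run_king_queen_demo()` prints the two similarities and their difference, which gives a single number for how far the analogy falls short.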

This slightly disappointing result intuitively makes sense to me. The model wasn’t trained to make vector addition a feature of its values. It’s a bunch of information ready for transfer learning. And what other information is in there? Embedding vectors encode a lot more, maybe important nuances and maybe extraneous values, than you would want in a space made purely for analogies.

1. Plotly Graph, “Understanding Word Embedding Arithmetic: Why there’s no single answer to ‘King − Man + Woman = ?’”. https://medium.com/plotly/understanding-word-embedding-arithmetic-why-theres-no-single-answer-to-king-man-woman-cd2760e2cb7f, 2020

2. Florian Huber, “King – Man + Woman = King ?”. https://blog.esciencecenter.nl/king-man-woman-king-9a7fd2935a85, 2019

3. Code to try this with OpenAI’s embeddings: https://github.com/seanmcnealy/seanmcnealy_samples/blob/master/embeddings.py